Intro to Kyverno

In my spare time, I've been delving into various software offerings within the CNCF landscape that I've heard about but haven't had the chance to thoroughly explore and experiment with in my own projects.

One such exploration is focused on Kyverno. Taking its name from the Greek word for "govern," Kyverno is an intriguing CNCF incubating project. It lets you write policies as ordinary Kubernetes resources in YAML, in line with the broader trend toward declarative, code-centric approaches in the Kubernetes ecosystem. Much like the combination of OPA and Gatekeeper (where policies are written in Rego), Kyverno excels at enforcing policies when objects are admitted to a Kubernetes cluster.

A simple illustration of Kyverno's functionality is a policy requiring every Namespace to carry a purpose label, such as production or development. This shows how Kyverno can enforce consistency and organization in Kubernetes configurations.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: require-ns-purpose-label
spec:
  validationFailureAction: Enforce
  rules:
  - name: require-ns-purpose-label
    match:
      any:
      - resources:
          kinds:
          - Namespace
    validate:
      message: "You must have label `purpose` with value set on all new namespaces."
      pattern:
        metadata:
          labels:
            purpose: "?*"
```

This namespace would be created successfully.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: prod-bus-app1
  labels:
    purpose: production
```

This namespace would fail creation and not be admitted to the cluster.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: prod-bus-app1
  labels:
    team: awesome-prod-app
```

This policy allows namespaces to be created with any non-empty purpose; however, the set of allowed values could be constrained further, for example with pattern operators or regular expressions.

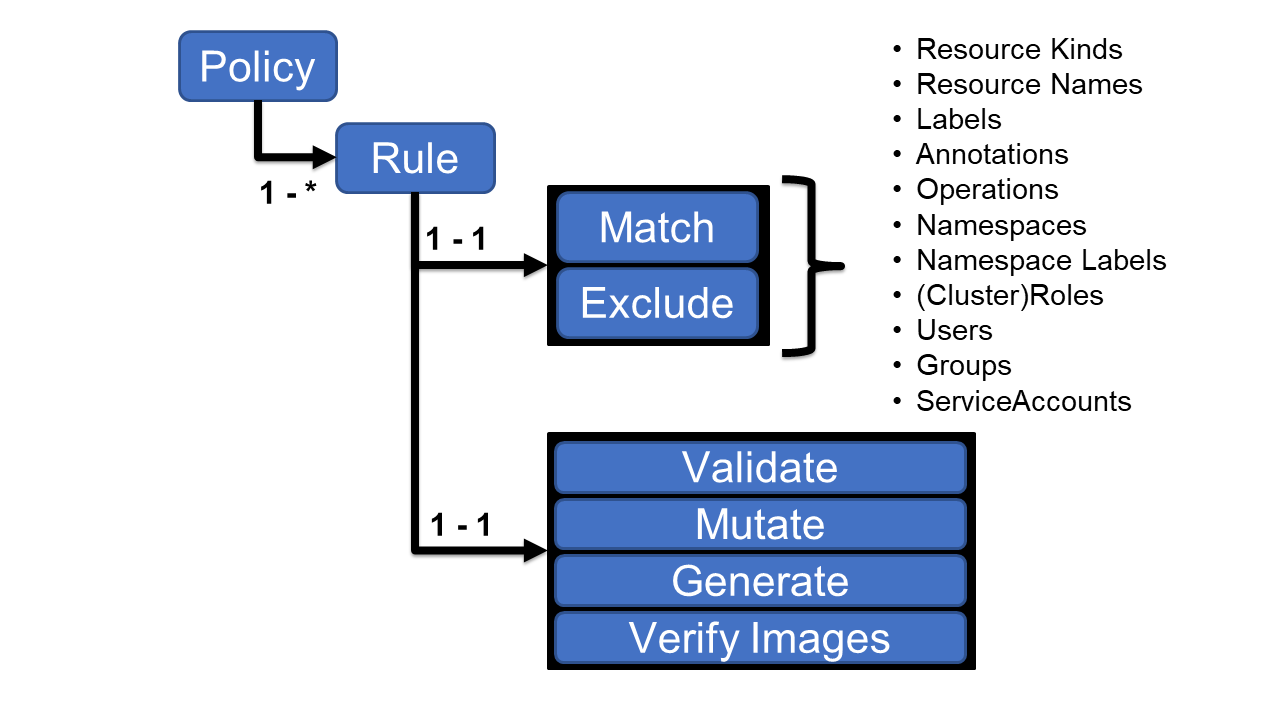
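As a sketch of constraining the allowed values, a pattern value can list alternatives with Kyverno's | (logical OR) operator. The policy name and the two allowed values below are assumptions chosen for illustration. Regular expressions are also possible, for example through the regex_match JMESPath function in a deny condition, but the pattern form is usually easier to read.

```yaml
# Sketch: allow only two purpose values (policy name and values are illustrative)
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: restrict-ns-purpose-values
spec:
  validationFailureAction: Enforce
  rules:
  - name: restrict-ns-purpose-values
    match:
      any:
      - resources:
          kinds:
          - Namespace
    validate:
      message: "The `purpose` label must be either `production` or `development`."
      pattern:
        metadata:
          labels:
            # `|` is Kyverno's logical OR operator inside a pattern value
            purpose: "production | development"
```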

Anatomy of a Rule

A Kyverno Policy, composed in YAML as depicted above, comprises several key sections, each serving distinct purposes:

- One or More Rules: Each rule uses a match block (and optionally an exclude block) to select objects by kind, name, namespace, labels, or other properties.

- Rule Actions: Once a rule is triggered, it can undertake various actions, including object validation, object mutation, object generation, or image verification. This modular approach provides flexibility in shaping and managing Kubernetes resources based on defined criteria.
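To make this anatomy concrete, here is a small illustrative policy with each part labeled. The policy name, the app label, and the excluded namespace are placeholders chosen for the example; validationFailureAction: Audit reports violations instead of blocking them.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: anatomy-example            # hypothetical name
spec:
  validationFailureAction: Audit   # report violations without blocking admission
  rules:
  - name: require-app-label
    match:                         # which resources the rule selects
      any:
      - resources:
          kinds:
          - Pod
    exclude:                       # resources skipped even when they match
      any:
      - resources:
          namespaces:
          - kube-system
    validate:                      # the action: validate, mutate, generate, or verifyImages
      message: "Pods must carry an `app` label."
      pattern:
        metadata:
          labels:
            app: "?*"
```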

Mutation

Kyverno also lets administrators mutate objects as they are admitted to a Kubernetes cluster. For instance, as a system administrator, you might want to ensure that images are always pulled from the registry rather than reused from a node's cache. Ideally, developers would set imagePullPolicy to Always themselves, but Kyverno adds a safety net by mutating objects automatically.

In practical terms, a Kyverno rule can specify that every container in any Pod should have its imagePullPolicy set to Always. This illustrates how Kyverno streamlines the enforcement of desired configurations across objects in a Kubernetes environment.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: always-pull-images
  annotations:
    policies.kyverno.io/title: Always Pull Images
    policies.kyverno.io/category: Sample
    policies.kyverno.io/severity: medium
    policies.kyverno.io/subject: Pod
    policies.kyverno.io/minversion: 1.6.0
    policies.kyverno.io/description: >-
      By default, images that have already been pulled can be accessed by other
      Pods without re-pulling them if the name and tag are known. In multi-tenant scenarios,
      this may be undesirable. This policy mutates all incoming Pods to set their
      imagePullPolicy to Always. An alternative to the Kubernetes admission controller
      AlwaysPullImages.
spec:
  rules:
  - name: always-pull-images
    match:
      any:
      - resources:
          kinds:
          - Pod
    mutate:
      patchStrategicMerge:
        spec:
          containers:
          - (name): "?*"
            imagePullPolicy: Always
```
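To see the mutation in action, consider a hypothetical Pod submitted without any imagePullPolicy (the Pod name and image are placeholders).

```yaml
# Hypothetical Pod with no imagePullPolicy set on its container
apiVersion: v1
kind: Pod
metadata:
  name: nginx-demo
spec:
  containers:
  - name: nginx
    image: nginx:1.25
```

Once the Pod is admitted, kubectl get pod nginx-demo -o jsonpath='{.spec.containers[0].imagePullPolicy}' should report Always, because Kyverno patched the field during admission. Without the policy, a tagged image such as nginx:1.25 would default to IfNotPresent. Note that this rule only touches the containers list, not initContainers.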

Creation

Kyverno can also generate new objects in response to the creation of another object.

For example, we might want to add a quota to every new Namespace.

The following example creates a new ResourceQuota and a LimitRange object whenever a new Namespace is created.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: add-ns-quota
  annotations:
    ...
spec:
  rules:
  - name: generate-resourcequota
    match:
      any:
      - resources:
          kinds:
          - Namespace
    generate:
      apiVersion: v1
      kind: ResourceQuota
      name: default-resourcequota
      synchronize: true
      namespace: "{{request.object.metadata.name}}"
      data:
        spec:
          hard:
            requests.cpu: '4'
            requests.memory: '16Gi'
            limits.cpu: '4'
            limits.memory: '16Gi'
  - name: generate-limitrange
    match:
      any:
      - resources:
          kinds:
          - Namespace
    generate:
      apiVersion: v1
      kind: LimitRange
      name: default-limitrange
      synchronize: true
      namespace: "{{request.object.metadata.name}}"
      data:
        spec:
          limits:
          - default:
              cpu: 500m
              memory: 1Gi
            defaultRequest:
              cpu: 200m
              memory: 256Mi
            type: Container
```
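For a hypothetical namespace named team-a, the first generate rule would produce roughly the following object (Kyverno also adds its own tracking labels, omitted here). Because synchronize is true, Kyverno keeps the generated object in sync with the policy and restores it if someone modifies or deletes it.

```yaml
# Approximate result of generate-resourcequota for a namespace called team-a (hypothetical)
apiVersion: v1
kind: ResourceQuota
metadata:
  name: default-resourcequota
  namespace: team-a
spec:
  hard:
    requests.cpu: '4'
    requests.memory: '16Gi'
    limits.cpu: '4'
    limits.memory: '16Gi'
```

The LimitRange matters here: once a ResourceQuota constrains requests.cpu and limits.cpu, Pods that do not declare those values are rejected, and the LimitRange defaults fill them in so that such Pods can still be admitted.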

Other Uses

In addition to the above use cases, Kyverno has a few other capabilities.

- Cleanup: Kyverno can delete existing objects that match given criteria on a cron-style schedule, using CleanupPolicy and ClusterCleanupPolicy resources. For instance, when a Deployment rolls out a new version, Kubernetes creates a new ReplicaSet and scales the previous one down to zero; a cleanup policy could periodically remove such empty ReplicaSets.

- Image Verification: Kyverno can verify images before they run. For example, a policy can require that an image is signed and that it carries a vulnerability scan produced by Grype with no vulnerability scoring above 8.0.
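The ReplicaSet cleanup idea can be sketched as a ClusterCleanupPolicy. This assumes Kyverno 1.11 or later (the 3.1.x Helm chart installed below), where cleanup policies use the kyverno.io/v2beta1 API; older releases used a different API version. The policy name and schedule are illustrative.

```yaml
# Sketch: periodically delete ReplicaSets that have been scaled to zero.
apiVersion: kyverno.io/v2beta1
kind: ClusterCleanupPolicy
metadata:
  name: clean-empty-replicasets   # hypothetical name
spec:
  match:
    any:
    - resources:
        kinds:
        - ReplicaSet
  # Only delete ReplicaSets whose desired replica count is zero
  conditions:
    all:
    - key: "{{ target.spec.replicas }}"
      operator: Equals
      value: 0
  # Standard cron syntax: run every 30 minutes
  schedule: "*/30 * * * *"
```

Two caveats apply. Deleting old ReplicaSets removes the revisions that kubectl rollout undo relies on, and Kubernetes already prunes them through a Deployment's revisionHistoryLimit, so the native setting is often the simpler choice. The Kyverno cleanup controller may also need additional RBAC permissions to list and delete ReplicaSets.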

For a comprehensive list of example Kyverno policies, see the Kyverno policy library at https://kyverno.io/policies/.
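As a minimal sketch of the signature half of image verification, the policy below requires images from one registry path to be signed with a given Cosign key. The registry path and key are placeholders; checking a Grype scan attestation against a score threshold would need an additional attestations entry with conditions, which is not shown here.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: verify-image-signature           # hypothetical name
spec:
  validationFailureAction: Enforce
  webhookTimeoutSeconds: 30              # signature lookups can be slow
  rules:
  - name: verify-signature
    match:
      any:
      - resources:
          kinds:
          - Pod
    verifyImages:
    - imageReferences:
      - "registry.example.com/myorg/*"   # hypothetical registry path
      attestors:
      - entries:
        - keys:
            publicKeys: |-
              -----BEGIN PUBLIC KEY-----
              <your cosign public key>
              -----END PUBLIC KEY-----
```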

Installation

Installation of Kyverno is quite straightforward. You can read about it in detail in the Kyverno Docs.

I have used Flux's ability to install Helm charts (through HelmRepository and HelmRelease resources) so that I can provision Kyverno and any associated policies via GitOps.

```yaml
# First, install the Helm repository that contains Kyverno
apiVersion: source.toolkit.fluxcd.io/v1beta2
kind: HelmRepository
metadata:
  name: kyverno
  namespace: tanzu-helm-resources
spec:
  interval: 5m0s
  url: https://kyverno.github.io/kyverno/
```

```yaml
---
# Install a fixed version of Kyverno
apiVersion: helm.toolkit.fluxcd.io/v2beta1
kind: HelmRelease
metadata:
  name: kyverno-release
  namespace: tanzu-helm-resources
  annotations:
    kapp.k14s.io/change-group: "crossplane/helm-install"
    kapp.k14s.io/change-rule: "upsert after upserting crossplane/helm-repo"
spec:
  chart:
    spec:
      chart: kyverno
      reconcileStrategy: ChartVersion
      sourceRef:
        kind: HelmRepository
        name: kyverno
      version: 3.1.4
  install:
    createNamespace: true
  interval: 10m0s
```

Provisioning Namespaces

In some of my past discussions of Tanzu Application Platform (TAP) installation, I pointed out a crucial step that must be performed before a developer can start using a namespace.

There are multiple objects that need to be provisioned.

These include:

- Secret to reach GitHub

- Scan Policy

- Registry Secret

- Tekton Testing Pipeline

- RBAC

In early versions of TAP, I used Carvel's kapp-controller to copy the contents of a remote Git repo into a namespace.

The example below creates a namespace and then creates a Carvel App that keeps that namespace in sync with the contents of:

- clusters/common/app-contents/dev-namespace

- clusters/common/app-contents/test-scan-namespace

The intoNs setting in the deploy section at the very end accomplishes this.

```yaml
#@ load("@ytt:data", "data")
---
apiVersion: v1
kind: Namespace
metadata:
  name: canary
---
apiVersion: kappctrl.k14s.io/v1alpha1
kind: App
metadata:
  name: dev-ns-canary
  namespace: tap-install
  annotations:
    kapp.k14s.io/change-rule: "upsert after upserting tap"
spec:
  serviceAccountName: tap-install-gitops-sa
  syncPeriod: 1m
  fetch:
  - git:
      url: #@ data.values.git.url
      ref: #@ data.values.git.ref
  template:
  - ytt:
      paths:
      - clusters/common/app-contents/dev-namespace
      - clusters/common/app-contents/test-scan-namespace
      valuesFrom:
      - secretRef:
          name: tap-install-gitops
  deploy:
  - kapp:
      intoNs: canary
```

The values for the Git URL and Git ref are configurable via the tap-install-gitops secret.

Later versions of TAP introduced a controller that copies the contents of a Git repo into namespaces. While this worked well, it required some configuration within the tap-values file. In addition, it assumed that the default setup would create the namespace and manage its entire lifecycle; it could not account for namespaces created outside of the TAP installation.

Using Kyverno to provision namespaces

Next, I will show you two possible ways of using Kyverno to configure your namespaces automatically.

Use a Kyverno policy to create what you need

We can apply the required objects directly using Kyverno policy.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: add-ns
  ..
spec:
  rules:
  - name: generate-gitsecret
    match:
      any:
      - resources:
          kinds:
          - Namespace
    generate:
      apiVersion: secretgen.carvel.dev/v1alpha1
      kind: SecretImport
      # Kyverno expects the target name and namespace at this level,
      # with the rest of the generated object under `data`
      name: git-https
      namespace: "{{request.object.metadata.name}}"
      data:
        metadata:
          annotations:
            tekton.dev/git-0: https://github.com
        spec:
          fromNamespace: tap-install
  - name: generate-scanpolicy
    match:
      any:
      - resources:
          kinds:
          - Namespace
    generate:
      apiVersion: scanning.apps.tanzu.vmware.com/v1beta1
      kind: ScanPolicy
      name: lax-scan-policy
      namespace: "{{request.object.metadata.name}}"
      data:
        metadata:
          labels:
            app.kubernetes.io/part-of: scan-system #! This label is required to have policy visible in tap-gui, but the value can be anything
        spec:
          regoFile: |
            package main

            # Accepted Values: "Critical", "High", "Medium", "Low", "Negligible", "UnknownSeverity"
            notAllowedSeverities := ["UnknownSeverity"]

            ignoreCves := []

            contains(array, elem) = true {
              array[_] = elem
            } else = false { true }

            isSafe(match) {
              severities := { e | e := match.ratings.rating.severity } | { e | e := match.ratings.rating[_].severity }
              some i
              fails := contains(notAllowedSeverities, severities[i])
              not fails
            }

            isSafe(match) {
              ignore := contains(ignoreCves, match.id)
              ignore
            }

            deny[msg] {
              comps := { e | e := input.bom.components.component } | { e | e := input.bom.components.component[_] }
              some i
              comp := comps[i]
              vulns := { e | e := comp.vulnerabilities.vulnerability } | { e | e := comp.vulnerabilities.vulnerability[_] }
              some j
              vuln := vulns[j]
              ratings := { e | e := vuln.ratings.rating.severity } | { e | e := vuln.ratings.rating[_].severity }
              not isSafe(vuln)
              msg = sprintf("CVE %s %s %s", [comp.name, vuln.id, ratings])
            }
```
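One prerequisite is easy to miss with generate rules like these. In Kyverno 1.10 and later, generated resources are created by Kyverno's background controller, which only has permissions for a default set of kinds, so custom kinds such as SecretImport, ScanPolicy, and the kapp-controller App used in the next policy typically need extra RBAC. The sketch below grants it through an aggregated ClusterRole. The role name is hypothetical, and the labels are an assumption: they must match the aggregationRule of the background controller's ClusterRole installed by the Helm chart, so copy them from kubectl get clusterrole -o yaml in your cluster rather than trusting the values here. Creating this role before applying the policies avoids Kyverno rejecting them or failing to generate.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: kyverno:generate-tap-namespace-resources   # hypothetical name
  labels:
    # Assumed to match the background controller's aggregationRule; verify in your cluster
    app.kubernetes.io/component: background-controller
    app.kubernetes.io/instance: kyverno-release    # assumption: the Helm release name
    app.kubernetes.io/part-of: kyverno
rules:
- apiGroups: ["secretgen.carvel.dev"]
  resources: ["secretimports"]
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
- apiGroups: ["scanning.apps.tanzu.vmware.com"]
  resources: ["scanpolicies"]
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
- apiGroups: ["kappctrl.k14s.io"]
  resources: ["apps"]
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
```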

This approach has a drawback: the policy must be modified every time the namespace contents change. Personally, I prefer to use kapp-controller from the Carvel project to manage the contents of the namespace.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: add-ns-kapp
  annotations:
    ...
    policies.kyverno.io/description: >-
      This policy generates a kapp-controller App that uses GitOps to provision
      the objects needed in each new namespace.
spec:
  rules:
  - name: generate-kapp
    match:
      any:
      - resources:
          kinds:
          - Namespace
    generate:
      apiVersion: kappctrl.k14s.io/v1alpha1
      kind: App
      # Target name and namespace of the generated App; its body goes under `data`
      name: "{{request.object.metadata.name}}-ns"
      namespace: tap-install
      data:
        spec:
          serviceAccountName: tap-install-gitops-sa
          syncPeriod: 1m
          fetch:
          - git:
              url: https://github.com/tanzu-end-to-end/tap-gitops-reference
              ref: main
          template:
          - ytt:
              paths:
              - clusters/common/app-contents/dev-namespace
              - clusters/common/app-contents/test-scan-namespace
              valuesFrom:
              - secretRef:
                  name: tap-install-gitops
          deploy:
          - kapp:
              intoNs: "{{request.object.metadata.name}}"
```

This lets us derive some parameters from the incoming object: "{{request.object.metadata.name}}" supplies the namespace name used for intoNs (and in the App's name).

We keep the valuesFrom reference because it lets us populate values from a secret. For example, we may need to supply a CA certificate, which we can do from a value within the secret; see the [Grype Config Map](https://github.com/tanzu-end-to-end/tap-gitops-reference/blob/main/clusters/common/app-contents/test-scan-namespace/gryp-ca-configmap.yaml).

Using this approach, it is also possible to change the behavior by pointing to different Git repos based on the value of the purpose label that the require-ns-purpose-label ClusterPolicy makes mandatory.
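As a sketch of that idea, the policy below derives the ytt path from the purpose label rather than switching repos. It assumes a hypothetical repository layout with one folder per purpose value (for example clusters/common/app-contents/production and clusters/common/app-contents/development); these folders are not necessarily present in the reference repository. A precondition skips namespaces without a purpose label so that variable substitution never fails.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: add-ns-kapp-by-purpose      # hypothetical name
spec:
  rules:
  - name: generate-kapp-by-purpose
    match:
      any:
      - resources:
          kinds:
          - Namespace
    # Only act on namespaces that actually carry a non-empty purpose label
    preconditions:
      all:
      - key: "{{ request.object.metadata.labels.purpose || '' }}"
        operator: NotEquals
        value: ""
    generate:
      apiVersion: kappctrl.k14s.io/v1alpha1
      kind: App
      name: "{{request.object.metadata.name}}-ns"
      namespace: tap-install
      data:
        spec:
          serviceAccountName: tap-install-gitops-sa
          syncPeriod: 1m
          fetch:
          - git:
              url: https://github.com/tanzu-end-to-end/tap-gitops-reference
              ref: main
          template:
          - ytt:
              paths:
              # Hypothetical layout: one folder per purpose value
              - "clusters/common/app-contents/{{request.object.metadata.labels.purpose}}"
              valuesFrom:
              - secretRef:
                  name: tap-install-gitops
          deploy:
          - kapp:
              intoNs: "{{request.object.metadata.name}}"
```

Because the folder name is taken verbatim from the label, a purpose value without a matching folder leaves the generated App failing to reconcile. Restricting the purpose label to known values with a validation pattern keeps the two in step.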

Conclusion

In summary, Kyverno emerges as a valuable tool for Kubernetes users seeking an efficient, flexible, and secure policy management solution. Its Policy as Code approach, dynamic enforcement, and community support make it a compelling choice for organizations aiming to improve the reliability and security of their Kubernetes deployments.

Kyverno allows for:

- Policy as Code (PaC): Kyverno policies are defined as Kubernetes resources written in YAML, promoting a "policy as code" approach. This enables version control, collaboration, and easier management of policies alongside the application code.

- Dynamic Policy Enforcement: Kyverno enforces policies in real time as resources are created or updated in the Kubernetes cluster. This ensures that policies are consistently applied, reducing the risk of misconfigurations and security vulnerabilities.

- Simplified Policy Management: Kyverno simplifies policy management by providing a centralized and declarative approach. Policies can be defined, reviewed, and audited easily, contributing to better governance and operational efficiency.