Kubernetes and Google Cloud SQL

This page was converted from my old blog and hasn't been reviewed. If you see an error please let me know in the comments.

Cloud SQL is a hosted SQL database service, similar to Amazon RDS, for MySQL or PostgreSQL databases. It supports automated management, including backup and deployment. Because the database is created through the GCP Console, it is very easy to set up a scalable and reliable database.

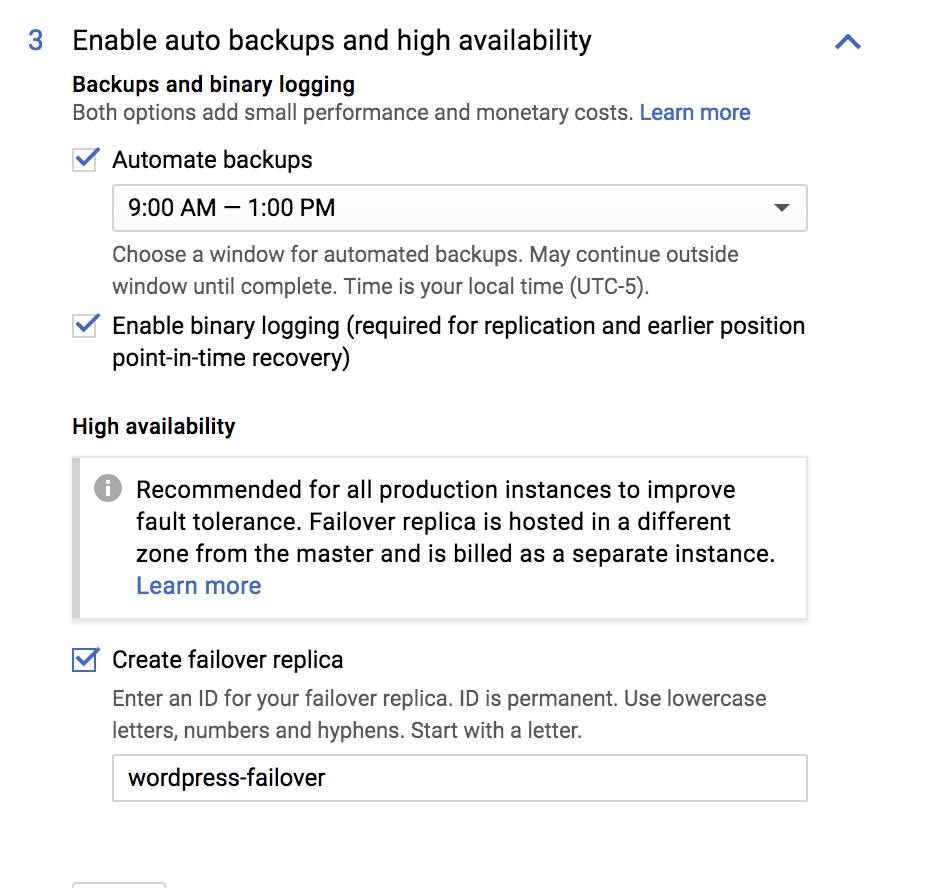

To create a new database, use the GCP Console. If you want failover, make sure to enable that option when creating the database.

For clients to be able to access the database, an ingress rule (an "authorized network" in Cloud SQL terms) must be created. Unfortunately, at the time of writing GCP only allows external IP addresses to be configured for ingress into Cloud SQL. For your Kubernetes cluster to reach the database, you would need to assign routable external IPs to the cluster and add them to the database's ingress rules.

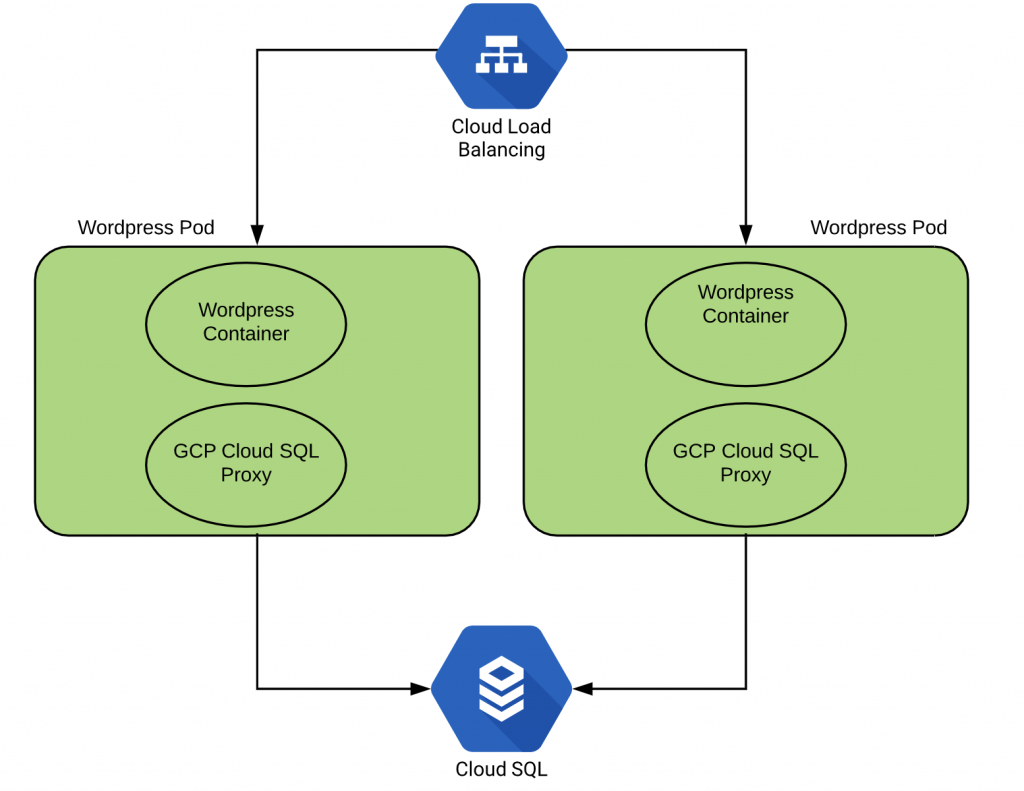

To get around this issue, use the Cloud SQL Proxy. It provides secure access to your Cloud SQL Second Generation instances without having to whitelist IP addresses or set up SSL tunneling.

The steps to set up the Cloud SQL Proxy are fairly straightforward and are documented in Google's Cloud SQL documentation:

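- Create a service account to connect to the database.

- Create a new user to access the database.

- Create the Kubernetes Secrets for the database credentials and the service account key.

- Update the Pod configuration file (here, a Deployment) to add the proxy container: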
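The Secrets step can also be done declaratively. The sketch below is one possible way to write both Secrets as manifests; it assumes a hypothetical database user named `proxyuser`, a placeholder password, and that the service account key was downloaded as `Labs-jellin-e4ccff43f21b.json` (the file name the proxy's `-credential_file` flag expects in the Deployment). Treat all values as placeholders and avoid committing real credentials to version control.

```yaml
# Hypothetical values: replace the user name, password and key contents
# with your own. stringData accepts plain text; Kubernetes merges it into
# the base64-encoded data field when the Secret is written.
apiVersion: v1
kind: Secret
metadata:
  name: cloudsql-db-credentials
type: Opaque
stringData:
  username: proxyuser   # hypothetical database user from the previous step
  password: change-me   # placeholder password
---
apiVersion: v1
kind: Secret
metadata:
  name: cloudsql-instance-credentials
type: Opaque
stringData:
  # Each key is mounted as a file under /secrets/cloudsql, so this key must
  # match the file name passed to the proxy's -credential_file flag.
  Labs-jellin-e4ccff43f21b.json: |
    <contents of the downloaded service account JSON key file>
```

The same Secrets can also be created imperatively with `kubectl create secret generic`. Either way, the Secret names and keys have to line up with the `secretKeyRef` and `secretName` entries in the Deployment that follows.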

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress
  labels:
    app: wordpress
spec:
  # apps/v1 requires an explicit selector that matches the Pod template labels.
  selector:
    matchLabels:
      app: wordpress
  template:
    metadata:
      labels:
        app: wordpress
    spec:
      containers:
        # WordPress reaches the database through the proxy sidecar on localhost.
        - name: web
          image: wordpress:4.8.2-apache
          ports:
            - containerPort: 80
          env:
            - name: WORDPRESS_DB_HOST
              value: 127.0.0.1:3306
            # These secrets are required to start the pod.
            - name: WORDPRESS_DB_USER
              valueFrom:
                secretKeyRef:
                  name: cloudsql-db-credentials
                  key: username
            - name: WORDPRESS_DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: cloudsql-db-credentials
                  key: password
        # Change the -instances value to your own instance connection name,
        # in the format $PROJECT:$REGION:$INSTANCE, and the -credential_file
        # path to the key file stored in the cloudsql-instance-credentials Secret.
        - name: cloudsql-proxy
          image: gcr.io/cloudsql-docker/gce-proxy:1.11
          command: ["/cloud_sql_proxy",
                    "-instances=jeffellin-project:us-central1:wordpress=tcp:3306",
                    "-credential_file=/secrets/cloudsql/Labs-jellin-e4ccff43f21b.json"]
          volumeMounts:
            - name: cloudsql-instance-credentials
              mountPath: /secrets/cloudsql
              readOnly: true
      volumes:
        - name: cloudsql-instance-credentials
          secret:
            secretName: cloudsql-instance-credentials
        - name: cloudsql
          emptyDir: {}
```

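This is one of the few cases where it makes sense to have more than one container within a Pod: the WordPress application and the Cloud SQL Proxy. Containers in the same Pod share a network namespace, which is why WordPress can reach the proxy at 127.0.0.1:3306.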

Apply the changes (for example with `kubectl apply -f`) and create a Service to expose the app:

```
kubectl expose deployment wordpress --port=8888 --target-port=80 --name=wordpress --type=LoadBalancer
```
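`kubectl expose` generates the Service for you. If you would rather keep it in version control next to the Deployment, a roughly equivalent manifest is sketched below. It assumes the Pods carry the label `app: wordpress`, as in the Deployment above; it is an approximation of what the command creates, not its exact output.

```yaml
# Roughly what `kubectl expose deployment wordpress --port=8888
# --target-port=80 --name=wordpress --type=LoadBalancer` creates.
apiVersion: v1
kind: Service
metadata:
  name: wordpress
  labels:
    app: wordpress
spec:
  type: LoadBalancer
  # Send traffic to Pods carrying the Deployment's label.
  selector:
    app: wordpress
  ports:
    - protocol: TCP
      port: 8888       # port exposed on the load balancer
      targetPort: 80   # containerPort of the WordPress container
```

Either way, the external IP is assigned by GCP once the load balancer is provisioned, so yours will differ from the one below.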

Once the Service has been created and GCP has assigned it an external IP (the EXTERNAL-IP column below), you should be able to access the WordPress blog at http://35.225.0.109:8888.

```
Jeffreys-MacBook-Pro:wordpress jeff$ kubectl get services
NAME         TYPE           CLUSTER-IP     EXTERNAL-IP    PORT(S)          AGE
kubernetes   ClusterIP      10.15.240.1    <none>         443/TCP          2h
wordpress    LoadBalancer   10.15.246.41   35.225.0.109   8888:31265/TCP   1m
```