Integrating Jenkins, Tanzu Build Service and ArgoCD

This is part two in a series of posts discussing how to integrate Jenkins, Tanzu Build Service, and ArgoCD.

- Part 1: Iterative Development

- Part 2: Intake of Buildpack updates

- Part 3: Promoting code to production

Intake of Buildpack updates

An important feature of Tanzu Build Service is the ability to rebuild containers when updates to the operating system or to runtimes such as Java become available.

This is a fairly simple process when your container fleet consists of only one or two images. However, as the use of containers grows, there may be dozens if not hundreds of containers that must be rebuilt on a fairly frequent basis.

The rate at which fixes become available can result in never-ending toil for teams that rebuild containers by hand. If the process is not automated, it risks consuming the team's time, leaving them less free to develop new features that add business value.

Importing a new Buildpack or Stack

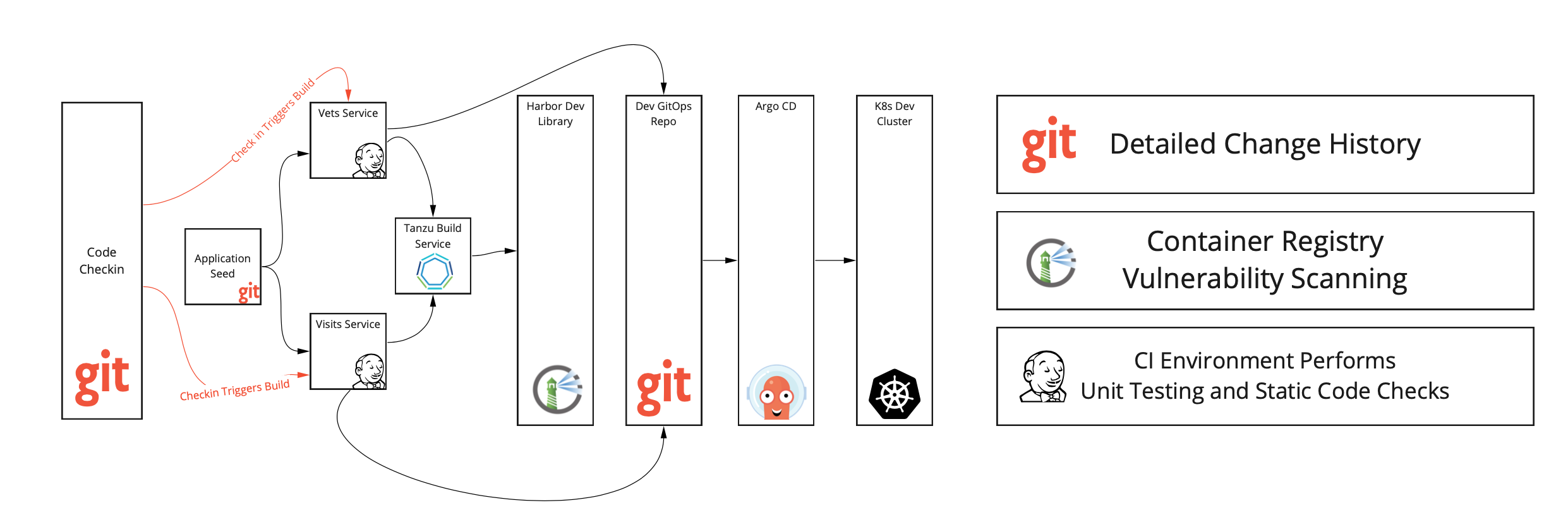

The above diagram depicts the flow when an updated Buildpack or Stack is imported.

- An update to the Tanzu Build Service artifacts, including Buildpacks, ClusterBuilders and ClusterStacks is uploaded to the build server.

- Each image that Tanzu Build Service is tracking is automatically rebuilt and pushed to an image repository.

- Because we are using Harbor, the image can now be scanned for vulnerabilities using Trivy or Clair. If the image passes muster, a webhook signals Jenkins to update the kustomize manifest. While these changes could go directly to production, most organizations will want to stage them in a pre-prod environment or use a progressive or canary rollout to test them.

- ArgoCD applies the latest configuration to the desired clusters. If needed the change can be rolled back using ArgoCD or reverting a commit to the GitOps repo.
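The "passes muster" check in the third step can be thought of as a small gate that looks at the scan result before Jenkins is notified. The Python sketch below is illustrative only: it assumes a simplified, hypothetical Harbor-style webhook payload (a `type` field plus `event_data.resources[]` entries carrying a `resource_url` and a single `severity` string), a "Medium" threshold, and placeholder digests. Check the webhook schema of your Harbor version before relying on any of these field names.

```python
import json

# Severity levels, lowest to highest. Treating this as a strict ordering is
# an assumption of this sketch; adjust it to match your scanner's output.
SEVERITY_ORDER = ["None", "Low", "Medium", "High", "Critical"]


def images_to_promote(payload_json: str, max_allowed: str = "Medium") -> list[str]:
    """Return the image references whose worst finding is at most max_allowed.

    Expects a simplified, hypothetical Harbor-style payload:
      {"type": "SCANNING_COMPLETED",
       "event_data": {"resources": [{"resource_url": "...", "severity": "Low"}]}}
    """
    payload = json.loads(payload_json)
    if payload.get("type") != "SCANNING_COMPLETED":
        return []  # only a finished scan can gate a promotion
    limit = SEVERITY_ORDER.index(max_allowed)
    approved = []
    for resource in payload.get("event_data", {}).get("resources", []):
        severity = resource.get("severity")
        # Missing or unrecognised severities are rejected rather than guessed at.
        if severity in SEVERITY_ORDER and SEVERITY_ORDER.index(severity) <= limit:
            approved.append(resource["resource_url"])
    return approved


if __name__ == "__main__":
    event = json.dumps({
        "type": "SCANNING_COMPLETED",
        "event_data": {"resources": [
            {"resource_url": "harbor.ellin.net/dev/spring-petclinic-vets-service@sha256:aaa",
             "severity": "Low"},
            {"resource_url": "harbor.ellin.net/dev/spring-petclinic-api-gateway@sha256:bbb",
             "severity": "Critical"},
        ]},
    })
    # Only the vets-service image is within the threshold, so only it would be
    # handed to Jenkins to update the kustomize manifest.
    print(images_to_promote(event))
```

Rejecting unknown severities keeps the gate fail-closed: an image whose scan result cannot be interpreted is never promoted automatically.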

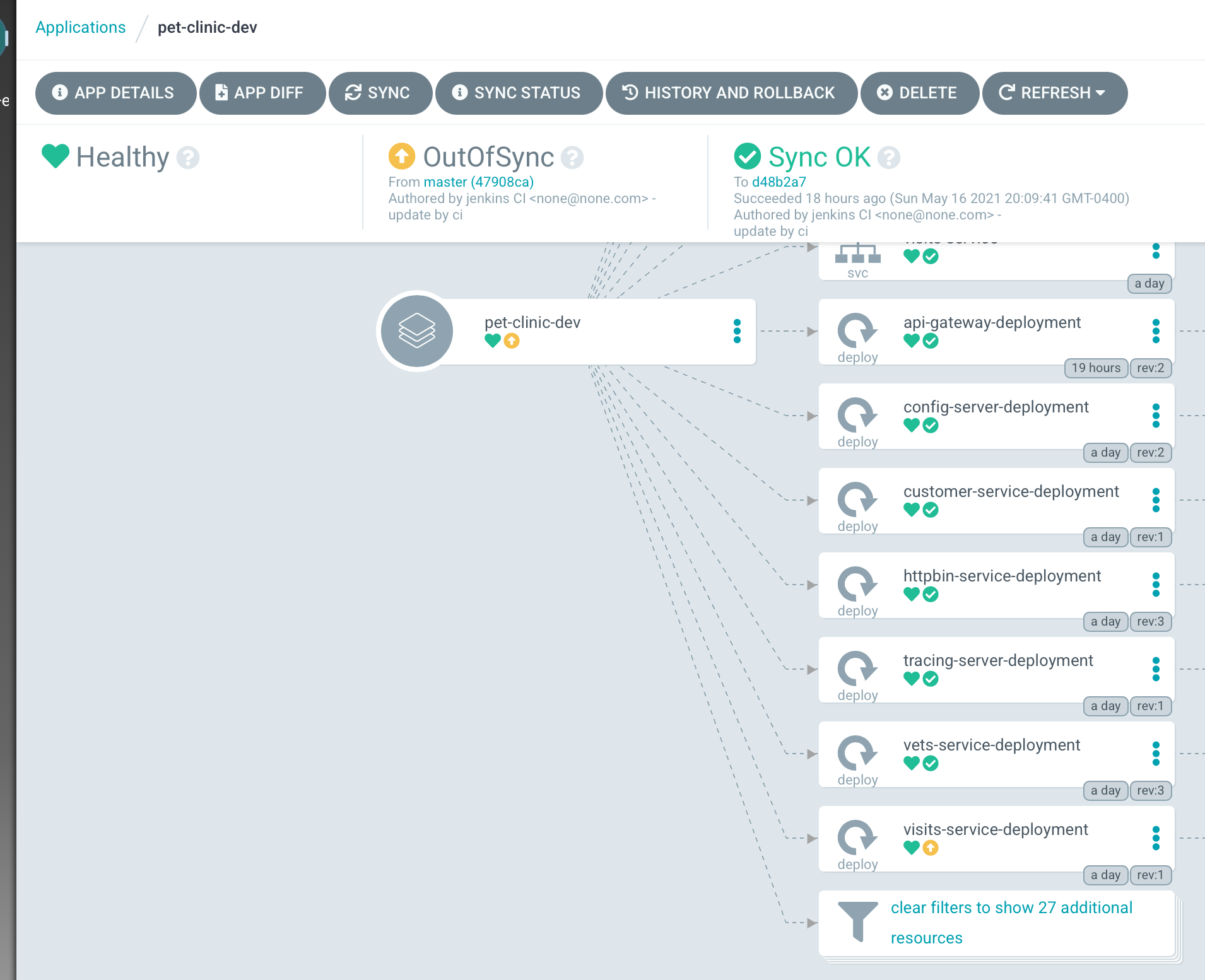

Argo Out of Sync

Argo automatically reconciles any difference between the Git baseline and what is deployed. The Argo UI shows when and why artifacts changed.

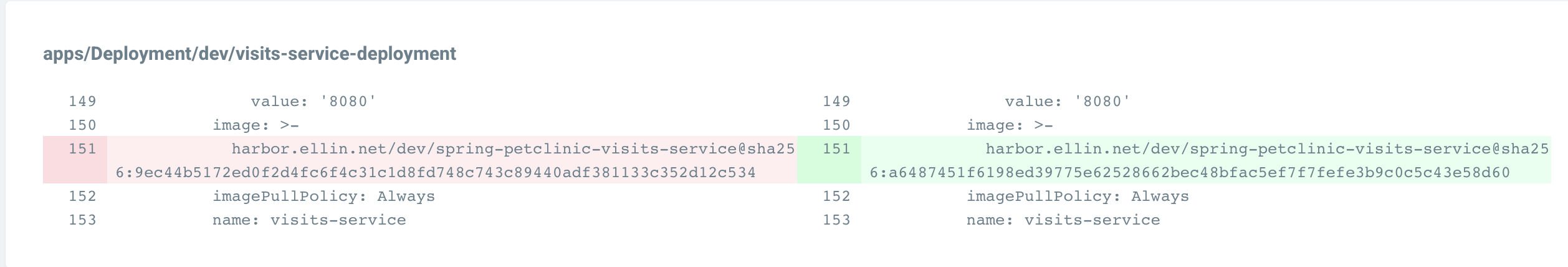

Argo visits service changed
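Conceptually, "out of sync" means the desired state recorded in Git differs from the live state in the cluster. The toy Python model below compares only the container image per Deployment, which is a large simplification of Argo CD's real diffing (it compares whole manifests); the function names, Deployment names, and digests are hypothetical.

```python
def out_of_sync(desired: dict[str, str], live: dict[str, str]) -> dict[str, tuple]:
    """Map each deployment whose live image differs from Git to (live, desired).

    A deployment that exists in Git but not in the cluster shows up with a
    live image of None. Resources that exist only in the cluster are ignored
    in this toy model.
    """
    return {
        name: (live.get(name), image)
        for name, image in desired.items()
        if live.get(name) != image
    }


def auto_sync(desired: dict[str, str], live: dict[str, str]) -> dict[str, tuple]:
    """Simulate an automated sync: Git wins, and the applied changes are returned."""
    drift = out_of_sync(desired, live)
    for name, (_, image) in drift.items():
        live[name] = image  # stands in for applying the manifest from Git
    return drift


if __name__ == "__main__":
    git_state = {"visits-service": "harbor.ellin.net/dev/spring-petclinic-visits-service@sha256:new"}
    cluster_state = {"visits-service": "harbor.ellin.net/dev/spring-petclinic-visits-service@sha256:old"}
    print(out_of_sync(git_state, cluster_state))  # visits-service is out of sync
    auto_sync(git_state, cluster_state)
    print(out_of_sync(git_state, cluster_state))  # prints {} once synced
```

Rolling back by reverting a commit in the GitOps repo fits the same model: the reverted image becomes the desired state, and the next sync applies it.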

Implementing This

Although any continuous delivery tool could be used to implement this workflow, I chose Jenkins for its ubiquity. If you want to follow along and run my pipelines, you will want to make sure you have Jenkins configured to run builds inside Kubernetes containers.

Jenkins Prerequisites

For this flow to work, we need a few extra Jenkins plugins in addition to the ones listed in the previous post.

- generic-web-hook:1.29.2

- script utils

As before I have included the versions of the plugins I am using. The Jenkins plugin ecosystem is very volatile, so version numbers may vary for you over time.

App Seed

In the spirit of configuration as code, I have a Groovy script that seeds jobs within Jenkins. Adding a new job is as simple as adding a new entry to the script and committing the change to Git. In the code below, each entry maps the name of a project to the pipeline file that should be used to build it. Since all my apps are Spring Boot, they can all use the same pipeline. A pipeline file is nothing more than a Groovy script that instructs Jenkins how to execute a job.

```groovy
def apps = [
    'spring-petclinic-vets-service': [
        buildPipeline: 'ci/jenkins/pipelines/spring-boot-app.pipeline'
    ],
    'spring-petclinic-api-gateway': [
        buildPipeline: 'ci/jenkins/pipelines/spring-boot-app.pipeline'
    ],
    'spring-petclinic-visits-service': [
        buildPipeline: 'ci/jenkins/pipelines/spring-boot-app.pipeline'
    ],
    'spring-petclinic-httpbin': [
        buildPipeline: 'ci/jenkins/pipelines/spring-boot-app.pipeline'
    ],
    'spring-petclinic-config-server': [
        buildPipeline: 'ci/jenkins/pipelines/spring-boot-app.pipeline'
    ]
]

apps.each { name, appInfo ->
    pipelineJob(name) {
        description("Job to build '$name'. Generated by the Seed Job, please do not change !!!")
        environmentVariables(
            APP_NAME: name
        )
        definition {
            cps {
                script(readFileFromWorkspace(appInfo.buildPipeline))
                sandbox()
            }
        }
        triggers {
            scm('* * * * *')
        }
        properties {
            disableConcurrentBuilds()
        }
    }
}
```

The complete script is available here

The Pipeline

The pipeline implements several stages.

- Fetch from GitHub

- Create Image

- Update Deployment Manifest

All of these steps are performed in a clean docker container as defined in the pod template.

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    app.kubernetes.io/name: jenkins-build
    app.kubernetes.io/component: jenkins-build
    app.kubernetes.io/version: "1"
spec:
  volumes:
    - name: secret-volume
      secret:
        secretName: pks-cicd
  hostAliases:
    - ip: 192.168.1.154
      hostnames:
        - "small.pks.ellin.net"
    - ip: 192.168.1.80
      hostnames:
        - "harbor.ellin.net"
  containers:
    - name: k8s
      image: harbor.ellin.net/library/docker-build
      command:
        - sleep
      env:
        - name: KUBECONFIG
          value: "/tmp/config/jenkins-sa"
      volumeMounts:
        - name: secret-volume
          readOnly: true
          mountPath: "/tmp/config"
      args:
        - infinity
```

Since our pod needs access to a remote Kubernetes cluster, I have mounted a service account KUBECONFIG into the pod as a secret.

- Fetch from GitHub

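```groovy
stage('Fetch from GitHub') {
    steps {
        dir("app") {
            git(
                poll: true,
                changelog: true,
                branch: "main",
                credentialsId: "git-jenkins",
                url: "git@github.com:jeffellin/${APP_NAME}.git"
            )
            // Record the commit SHA so the Create Image stage builds exactly this revision.
            sh 'git rev-parse HEAD > git-commit.txt'
        }
    }
}
```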

- Create an image with TBS. Use the -w flag to wait until the build is complete.

```groovy
stage('Create Image') {
    steps {
        container('k8s') {
            sh '''#!/bin/sh -e
export GIT_COMMIT=$(cat app/git-commit.txt)
kp image save ${APP_NAME} \
    --git git@github.com:jeffellin/${APP_NAME}.git \
    -t harbor.ellin.net/dev/${APP_NAME} \
    --env BP_GRADLE_BUILD_ARGUMENTS='--no-daemon build' \
    --git-revision ${GIT_COMMIT} -w
'''
        }
    }
}
```

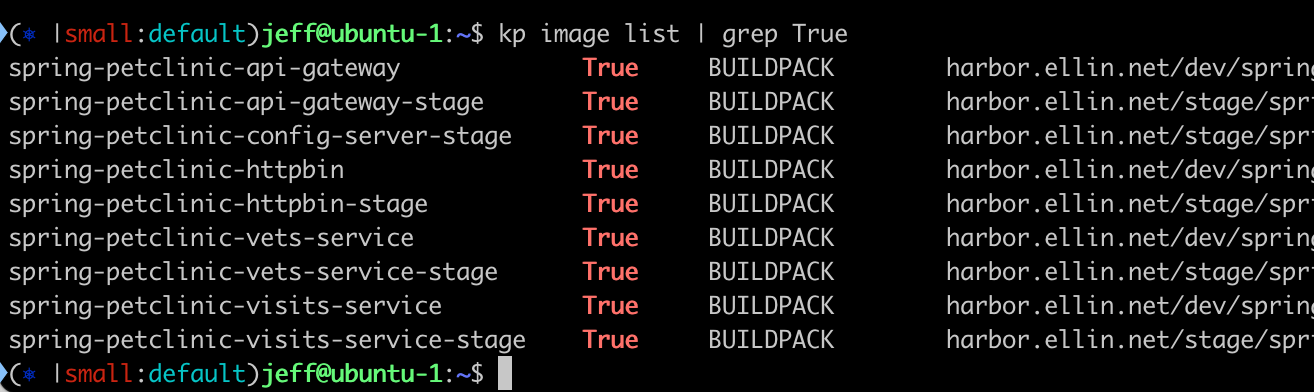

Images Monitored by TBS
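To trigger the same build outside Jenkins, for example from a laptop while debugging the pipeline, the shell step can be driven from a small script. This Python sketch only reproduces the arguments the Create Image stage already passes (the harbor.ellin.net/dev project and the jeffellin GitHub account are taken from the pipeline); the function names are hypothetical, and it assumes the kp CLI is on the PATH with a KUBECONFIG pointing at the Tanzu Build Service cluster, as in the k8s container.

```python
import shlex
import subprocess
from pathlib import Path


def kp_image_save_args(app_name: str, git_commit: str,
                       registry: str = "harbor.ellin.net/dev",
                       git_owner: str = "jeffellin") -> list[str]:
    """Build the same `kp image save` invocation as the Create Image stage."""
    return [
        "kp", "image", "save", app_name,
        "--git", f"git@github.com:{git_owner}/{app_name}.git",
        "-t", f"{registry}/{app_name}",
        # One argument, just like the single-quoted value in the shell step.
        "--env", "BP_GRADLE_BUILD_ARGUMENTS=--no-daemon build",
        "--git-revision", git_commit,
        "-w",  # wait until the build is complete
    ]


def create_image(app_name: str, commit_file: str = "app/git-commit.txt") -> None:
    """Run the build for the commit recorded by the Fetch from GitHub stage."""
    git_commit = Path(commit_file).read_text().strip()
    args = kp_image_save_args(app_name, git_commit)
    print("running:", shlex.join(args))
    subprocess.run(args, check=True)  # raises CalledProcessError if kp fails
```

Keeping the argument construction separate from the subprocess call means kp_image_save_args can be inspected without touching a cluster.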

- Update Deployment Manifest

Since we use Kustomize to maintain our deployment versions, we update the kustomization.yaml using kustomize itself.

```groovy
stage('Update Deployment Manifest') {
    steps {
        container('k8s') {
            dir("gitops") {
                git(
                    poll: false,
                    changelog: false,
                    branch: "master",
                    credentialsId: "git-jenkins",
                    url: "git@github.com:jeffellin/spring-petclinic-gitops.git"
                )
            }
            sshagent(['git-jenkins']) {
                sh '''#!/bin/sh -e
# Ask kpack for the image it built most recently (overwrite, do not append).
kubectl get image ${APP_NAME} -o json | jq -r .status.latestImage > containerversion.txt
export CONTAINER_VERSION=$(cat containerversion.txt)
cd gitops/app
# Pin the new image in kustomization.yaml.
kustomize edit set image ${APP_NAME}=${CONTAINER_VERSION}
git config --global user.name "jenkins CI"
git config --global user.email "none@none.com"
git add .
# Only commit when the manifest actually changed.
git diff-index --quiet HEAD || git commit -m "update by ci"
mkdir -p ~/.ssh
ssh-keyscan -t rsa github.com >> ~/.ssh/known_hosts
git pull -r origin master
git push --set-upstream origin master
'''
            }
        }
    }
}
```
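The effect of the kubectl and kustomize commands on the GitOps repo can be modelled in a few lines. This Python sketch is an approximation, not kustomize's implementation: it reads .status.latestImage from the JSON that kubectl returns for a kpack Image, then records the reference in the images list of a kustomization (held here as a Python dict rather than parsed YAML) as a newName plus digest. The function names, the deployment.yaml resource, and the digest are hypothetical.

```python
import json


def latest_image(kpack_image_json: str) -> str:
    """Same as `jq -r .status.latestImage` on `kubectl get image <name> -o json`."""
    return json.loads(kpack_image_json)["status"]["latestImage"]


def set_image(kustomization: dict, name: str, reference: str) -> dict:
    """Roughly what `kustomize edit set image <name>=<repo>@<digest>` records.

    Only digest references are handled, because that is the form kpack
    reports. Dropping an existing newTag is a choice of this sketch, so the
    digest alone pins the image.
    """
    new_name, sep, digest = reference.partition("@")
    if not sep:
        raise ValueError(f"expected repo@digest, got {reference!r}")
    images = kustomization.setdefault("images", [])
    entry = next((img for img in images if img.get("name") == name), None)
    if entry is None:
        entry = {"name": name}
        images.append(entry)
    entry.pop("newTag", None)
    entry.update(newName=new_name, digest=digest)
    return kustomization


if __name__ == "__main__":
    kpack_json = json.dumps({"status": {"latestImage":
        "harbor.ellin.net/dev/spring-petclinic-visits-service@sha256:0123abcd"}})
    kustomization = {"resources": ["deployment.yaml"]}
    set_image(kustomization, "spring-petclinic-visits-service", latest_image(kpack_json))
    print(kustomization["images"])
```

Pinning by digest rather than by tag means the commit in the GitOps repo identifies exactly one build, which is what makes reverting that commit a reliable rollback.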

All of the pipeline stages run within the "k8s" container, which uses the image harbor.ellin.net/library/docker-build pulled from Harbor.

This image is based on the following Dockerfile.

```dockerfile
FROM docker:dind

ENV KUBE_VERSION 1.20.4
ENV HELM_VERSION 3.5.3
ENV KP_VERSION 0.2.0
RUN apk add --no-cache ca-certificates bash git openssh curl jq bind-tools subversion git-svn \
    && wget -q https://storage.googleapis.com/kubernetes-release/release/v${KUBE_VERSION}/bin/linux/amd64/kubectl -O /usr/local/bin/kubectl \
    && chmod +x /usr/local/bin/kubectl \
    && wget -q https://get.helm.sh/helm-v${HELM_VERSION}-linux-amd64.tar.gz -O - | tar -xzO linux-amd64/helm > /usr/local/bin/helm \
    && chmod +x /usr/local/bin/helm \
    && chmod g+rwx /root \
    && mkdir /config \
    && chmod g+rwx /config \
    && helm repo add "stable" "https://charts.helm.sh/stable" --force-update

RUN wget https://github.com/vmware-tanzu/kpack-cli/releases/download/v${KP_VERSION}/kp-linux-${KP_VERSION}
RUN mv kp-linux-${KP_VERSION} /usr/local/bin/kp
RUN chmod a+x /usr/local/bin/kp

RUN curl -s "https://raw.githubusercontent.com/kubernetes-sigs/kustomize/master/hack/install_kustomize.sh" | bash
RUN mv kustomize /usr/local/bin

#ADD ca.crt /usr/local/share/ca-certificates
#RUN update-ca-certificates
WORKDIR /config

CMD bash
```

It's the standard docker:dind image plus some utilities that we commonly need:

- bash

- git

- openssh

- curl

- jq

- helm

- kubectl

- kp

- kustomize

The complete script is available here

All the source code for the pet-clinic and its deployment is available on GitHub.

In the next article, I will show how to automate promoting artifacts to a stage/prod environment using GitOps.